
Bullet Point Summary

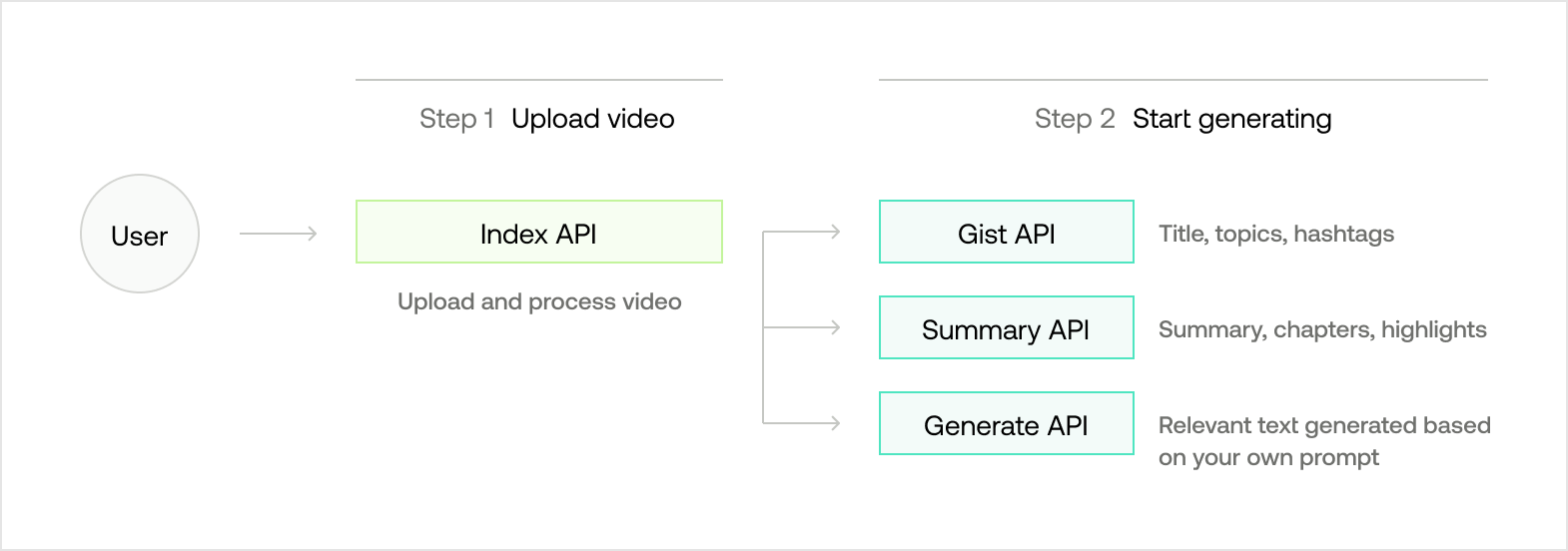

- Product: Twelve Labs is announcing their latest video-language foundation model Pegasus-1 along with a new suite of Video-to-Text APIs (Gist API, Summary API, Generate API).

- Product and Research Philosophy: Unlike many approaches that reframe video understanding as an image or speech understanding problem, Twelve Labs adopts a “Video First” strategy with four core principles: Efficient Long-form Video Processing, Multimodal Understanding, Video-native Embeddings, and Deep Alignment between Video and Language Embeddings.

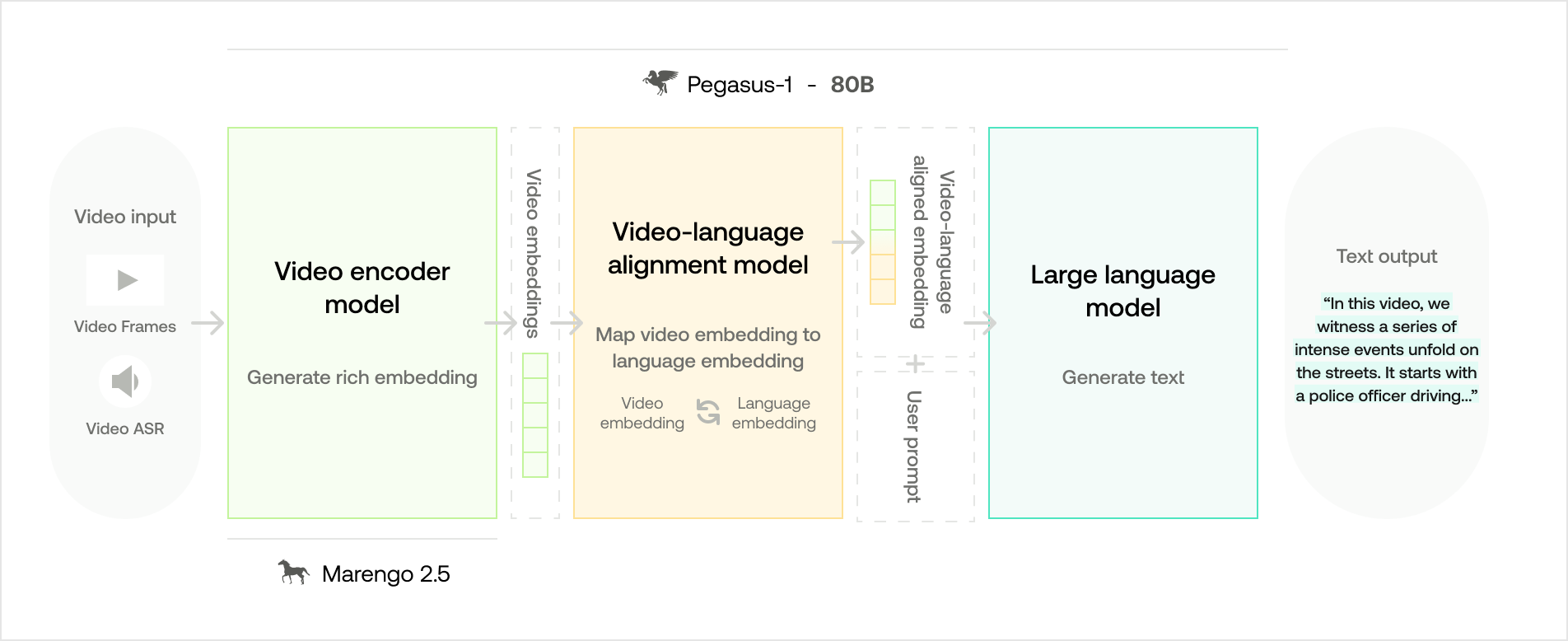

- The New Model: Pegasus-1 has approximately 80B parameters with three model components jointly trained together: video encoder, video-language alignment model, language decoder.

- Dataset: Twelve Labs has collected over 300 million diverse, carefully-curated video-text pairs, making it one of the largest video-text corpora there is for video-language foundation model training. This technical report is based on the initial training run conducted on a 10% subset consisting of 35M video-text pairs and over 1B image-text pairs.

- Performance against SOTA video-language model: Compared to the previous state-of-the-art (SOTA) video-language model, Pegasus-1 shows a 61% relative improvement on the MSR-VTT Dataset (Xu et al., 2016) and a 47% improvement on the Video Descriptions Dataset (Maaz et al., 2023), as measured by the QEFVC Quality Score (Maaz et al., 2023). On VidFactScore (our proposed evaluation metric), it shows a 20% absolute F1-score increase on the MSR-VTT Dataset and a 14% increase on the Video Descriptions Dataset.

- Performance against ASR+LLM models: ASR+LLM is a widely adopted approach for tackling video-to-text tasks. Compared to Whisper-ChatGPT (OpenAI) and a leading commercial ASR+LLM product, Pegasus-1 outperforms them by 79% on MSR-VTT and 188% on the Video Descriptions Dataset. On VidFactScore-F1, it shows absolute gains of 25% on the MSR-VTT Dataset and 33% on the Video Descriptions Dataset.

- API access to Pegasus-1: Here is the link for the waitlist for Pegasus-powered Video-to-Text APIs

Background

Expanding Our Research Horizon: Beyond Video Embedding to Generative Models

Twelve Labs, a San Francisco Bay Area-based AI research and product company, is at the forefront of multimodal video understanding. Today, we are thrilled to unveil the state-of-the-art video-to-text generation capabilities of Pegasus-1, our latest video-language foundation model. This represents our commitment to offer a comprehensive suite of APIs tailored for various downstream video understanding tasks. Our suite spans from natural language-based video moment retrieval to classification, and now, with the latest release, prompt-based video-to-text generation.

Our Video-First Ethos

Video data is intriguing as it contains multiple modalities within a single format. We believe that video understanding requires a novel take on marrying the intricacies of visual perception and the sequential and contextual nuances of audio and text. With the rise of capable image and language models, the dominant approach for video understanding has been to reframe it as an image or speech understanding problem. A typical framework would involve sampling frames from the video and inputting them into a vision-language model.

While this approach may be viable for short videos (which is why most vision-language models focus on < 1 min video clips), most real-world videos exceed 1 minute and can easily extend to hours. Using a vanilla "image-first" approach on such videos would mean processing tens of thousands of images for each video, which results in having to manipulate a vast number of image-text embeddings that, at best, loosely capture the semantics of spatiotemporal information. This is impractical in many applications in terms of performance, latency, and cost. Furthermore, the dominant methodology overlooks the multimodal nature of videos, wherein the joint analysis of both visual and auditory elements, including speech, is crucial for a comprehensive understanding of their content.

With the fundamental properties of video data in mind, Twelve Labs has adopted a “Video First” strategy, focusing our model, data, and ML systems solely on processing and understanding video data. This stands in contrast to the prevalent “Language/Image First” approach observed among many Generative AI players. Four central principles underscore our “Video First” ethos, guiding both the design of our video-language foundation models and the architecture of our ML system:

- Efficient Long-form Video Processing: Our model and system must be optimized to manage videos of diverse lengths, from brief 10-second clips to extensive multi-hour content.

- Multimodal Understanding: Our model must be able to synthesize visual, audio, and speech information.

- Video-native Embeddings: Instead of relying on image-native embeddings (e.g. CLIP) that focus on spatial relationships, we believe in the need for video-native embeddings that can incorporate spatiotemporal information of a video in a holistic manner.

- Deep Alignment Between Video-native Embeddings and Language Model: Beyond image-text alignment, our model must undergo deep video-language alignment through extensive training on large video-text corpora and video-text instruction datasets.

New Video-to-Text Capabilities and Interfaces

With a single API call, developers can prompt the Pegasus-1 model to produce specific text outputs from their video data. In contrast to existing solutions that either utilize speech-to-text conversion or rely solely on visual frame data, Pegasus-1 integrates visual, audio, and speech information to generate more holistic text from videos, achieving new state-of-the-art performance on video summarization benchmarks. (See the Evaluation and Results section below.)

The Gist and Summary APIs are pre-loaded with relevant prompts to work out of the box without needing user prompts. The Gist API can produce concise text outputs like titles, topics, and lists of relevant hashtags. The Summary API is designed to generate video summaries, chapters, and highlights. For customized outputs, the experimental Generate API allows users to prompt specific formats and styles, from bullet points to reports and even creative lyrics based on the content of the video.

Example 1: Generating a small report from a video through the Gist and Summary APIs.

Example 2: Generating a video summary by passing in a styling prompt to the Summary API.

Example 3: Generating a customized text output by prompting through the experimental Generate API.

Example 4: Demonstrating multimodal understanding that incorporates visual, speech, and auditory cues inside a video. (Highlighted in Green: Visual Information)

Pegasus-1 (80B) Model Overview

The Functions of the Constituent Models and the Overall Architecture

The Pegasus-1 model is structured around three primary components, which generate video-native embeddings, video-language-aligned embeddings, and text outputs, respectively.

1. Video Encoder Model - Derived from our existing Marengo embedding model

- Input: Video

- Output: Video Embeddings (incorporating visual, audio, and speech information)

- Function: The purpose of the video encoder is to glean intricate details from videos. It assesses frames and their temporal relationships to obtain relevant visual information while concurrently processing audio signals and speech information.

2. Video-language Alignment Model

- Input: Video Embeddings

- Output: Video-language-aligned Embeddings

- Function: The alignment model’s primary task is to bridge the video embeddings with the language model’s domain. This ensures that the language model interprets the video embeddings similarly to how it comprehends text tokens.

3. Large Language Model - Decoder model

- Input: Video-language-aligned Embedding, User prompt

- Output: Text

- Function: Leveraging its extensive knowledge base, the language model interprets the aligned embeddings based on the input user prompts. It then decodes this information into coherent, human-readable text.
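The data flow through the three components can be sketched in a few lines of Python. This is only an illustration of how the stages connect, not the actual Pegasus-1 implementation: the class name `PegasusLikePipeline`, the function names, the list-of-floats embedding type, and the toy stand-in components are all assumptions made for this sketch.

```python
from dataclasses import dataclass
from typing import Callable, List

Vector = List[float]  # assumed embedding representation, for illustration only


@dataclass
class PegasusLikePipeline:
    """Hypothetical wiring of the three stages; not the real Pegasus-1 code."""
    encode_video: Callable[[str], List[Vector]]    # video -> video embeddings
    align: Callable[[List[Vector]], List[Vector]]  # video embeddings -> aligned embeddings
    decode: Callable[[List[Vector], str], str]     # aligned embeddings + prompt -> text

    def generate(self, video_path: str, prompt: str) -> str:
        video_embeddings = self.encode_video(video_path)
        aligned_embeddings = self.align(video_embeddings)
        return self.decode(aligned_embeddings, prompt)


# Toy stand-ins so the sketch runs end to end; real components would be neural networks.
def toy_encoder(video_path: str) -> List[Vector]:
    return [[float(len(video_path)), 0.0], [1.0, 2.0]]


def toy_aligner(embeddings: List[Vector]) -> List[Vector]:
    return [[2.0 * x for x in vec] for vec in embeddings]


def toy_decoder(aligned: List[Vector], prompt: str) -> str:
    return f"{prompt} -> text conditioned on {len(aligned)} aligned embeddings"


pipeline = PegasusLikePipeline(toy_encoder, toy_aligner, toy_decoder)
print(pipeline.generate("demo.mp4", "Summarize the video"))
```

The point of the sketch is the interface between stages: the decoder never sees raw video, only the aligned embeddings and the user prompt.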

Model Parameters and Scale

The Pegasus-1 model has an aggregate of approximately 80B parameters. Detailed parameter distributions for individual components, including the size of the Marengo embedding model, are not disclosed at this time.

Training and Fine-tuning Datasets

- Training Data for Video-Language Foundation Model: From a collection of over 300M video-text pairs, we have processed and selected a 10% subset, consisting of 35M videos (termed TL-35M) and over 1B images. We believe this is sufficiently large as a first training run and will run the subsequent training on TL-100M. To the best of our knowledge, this is the largest video-text corpus there is that is carefully curated for training video-language foundation models. To support broader research efforts, we contemplate open-sourcing a smaller dataset. For expressions of interest, please contact research@twelvelabs.io.

- Fine-tuning Dataset: A high-quality video-to-text fine-tuning dataset is crucial for enhancing the instruction-following capability of the aforementioned video-language foundation model. Our selection criteria revolve around three primary aspects: diversity of domains, comprehensiveness, and precision in text annotation. On average, the text annotation associated with each video in our dataset is twice as long as those in existing open datasets for videos of similar length. Furthermore, annotations undergo several rounds of verification and correction to ensure accuracy. While this approach elevates the unit annotation cost, we prioritized maintaining high standards in the quality of the fine-tuning dataset over merely increasing its size, in line with the importance of data quality observed in previous work (Zhou et al., 2023).

Factors Influencing Performance

As one would expect, the overall model performance is strongly correlated with that of each component. How much each constituent model influences the overall quality remains an open question. We will conduct an extensive ablation study to gain a better understanding and will share our findings in the future.

- Video Encoder Model: Derived from our Marengo 2.5 model (March 2023, 100M+ Videos / 1B+ Images), which powers our current Search and Classify APIs, the video encoder model achieves state-of-the-art results in embedding-based tasks such as video classification and retrieval. The depth of the information extractable from a video is inherently upper-bounded by the video encoder model. Further details on the Marengo model will be featured in an upcoming report with the next release of Marengo 2.6.

- Video-language Alignment Model: This model acquires its video-language alignment proficiency during foundation model training and instruction fine-tuning. The extent to which our language model can interface with video embeddings is demarcated by this alignment mechanism.

- Large Language Model (Decoder Model): Our language model’s capabilities are framed by its knowledge acquired during its training phase. The caliber of the resulting text output is governed by the model’s ability to synthesize its knowledge, user prompts, and the video-language-aligned embeddings.

Evaluation and Results


Twelve Labs acknowledges the importance of ensuring the responsible deployment of advanced technologies, including our Pegasus-1 model. We are dedicated to developing a comprehensive and transparent dataset and evaluation framework that benchmarks all of our models across fine-grained categories including correctness, detail orientation, contextual understanding, safety, and helpfulness. We are in the process of developing metrics specifically for safety and helpfulness in video-language models, with results to be shared imminently. We are excited to present the preliminary findings in this blog with a more detailed report to be released in the future. The evaluation is based on the preview version of Pegasus-1.

Our evaluation codebase can be found here.

Comparison Models

We compare our model against three distinct model (or product) categories:

- Video-ChatGPT (Maaz et al., 2023): This open-source model is the current state-of-the-art video language model with a chat interface. The model processes video frames to capture visual events within a video. However, it does not utilize the conversational information within the video.

- Whisper + ChatGPT-3.5 (OpenAI): This combination is one of the few widely adopted approaches to video summarization. By leveraging the state-of-the-art speech-to-text and large language models, summaries are derived primarily from the video’s auditory content. A significant drawback is the oversight of valuable visual information within the video.

- Vendor A’s Summary API: A widely adopted commercial product for audio and video summary generation. Vendor A’s Summary API seems to rely only on transcription data and language models (akin to ASR+ChatGPT3.5) to output a video summary.

Datasets

MSR-VTT Dataset (Xu et al., 2016): MSR-VTT is a widely used video-caption dataset that assesses a model's ability to generate a description or caption for short video clips ranging from 10 to 40 seconds. Each video is annotated with 20 sentences by human annotators. To capture as much detail as possible, we use an LLM (ChatGPT) to merge the 20 individual captions into a single, dense description. Our evaluation is conducted on the JSFusion Test Split, which consists of 1,000 video clips.

Video-ChatGPT Video Descriptions Dataset (Maaz et al., 2023): Video-to-text evaluations predominantly use video captioning datasets, such as the MSR-VTT dataset (above). While these short video descriptions offer a point of reference, they fall short in evaluating text generation for the long-form videos prevalent in real-world contexts. Given this, we conduct an additional evaluation of our model on the Video-ChatGPT Video Descriptions Dataset. This dataset includes 500 videos from ActivityNet, all accompanied by comprehensive human-annotated summaries. Unlike traditional captioning datasets, the videos in this dataset range from 30 seconds to several minutes, and each comes with a dense, 5-8 sentence summary encapsulating both visual and auditory elements.

Metrics

Following the Quantitative Evaluation Framework for Video-based Conversation Models (QEFVC) (Maaz et al., 2023), we evaluate the models in three areas: Correctness of Information, Detail Orientation, and Contextual Understanding. To do this, we ask an instruction-tuned language model (e.g. GPT-4) to score each criterion with respect to the reference summary. To quantify overall performance, we average the three scores and define the result as the QEFVC Quality Score.
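As a minimal sketch of that aggregation step, the snippet below averages three per-criterion scores into a single number. The function name `qefvc_quality_score` and the example scores are hypothetical; in practice the per-criterion scores would come from the judging language model.

```python
from statistics import mean


def qefvc_quality_score(correctness: float, detail: float, context: float) -> float:
    """Average the three per-criterion judge scores into one quality score."""
    return mean([correctness, detail, context])


# Hypothetical judge scores for one video; not taken from the reported results.
scores = {"correctness": 3.0, "detail": 2.5, "context": 3.5}
quality = qefvc_quality_score(scores["correctness"], scores["detail"], scores["context"])
print(quality)
```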

While this evaluation metric offers a convenient means for comparison with existing models, it is not without challenges. Past work on language model evaluation has highlighted that relying solely on GPT-4 for model prediction scores is prone to inaccurate evaluation. It has also been observed that making the evaluation as fine-grained as possible improves both the consistency and the precision of the evaluation (Ye et al., 2023). With this in mind, and drawing inspiration from FActScore (Min et al., 2023), we introduce a refined evaluation method, VidFactScore (Video Factual Score), to evaluate the quality of video summaries in a much more fine-grained manner:

- For each video and reference summary pair, we break down the reference summary into discrete facts. As an example, “A man and a woman are running.” is segmented into “A man is running.”, and “A woman is running.”. This segmentation is done by an instruction-tuned language model, like GPT-4, with appropriate prompting.

- The model-generated summary undergoes a similar division.

- An ideal predicted summary should (1) encompass the majority of facts from the reference and (2) minimize the inclusion of facts absent in the reference. Determining the presence or absence of facts is achieved through an instruction-tuned language model with appropriate prompting.

- From a quantitative perspective, (1) corresponds to Recall Rate, which calculates the proportion of shared facts between prediction and reference to the total facts in the reference. (2) corresponds to Precision, which calculates the proportion of shared facts to the total facts in the prediction. The harmonic mean of the two numbers, F1, offers a straightforward metric for model comparison.
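The scoring step of this procedure can be sketched as follows, assuming both summaries have already been split into facts. Where the method uses an instruction-tuned language model to decide whether a fact is present, this sketch substitutes a simple normalized exact-match check (`exact_match`); that stand-in and the names `vid_fact_score` and `normalize` are assumptions for illustration and would be replaced by the LLM judgment in a real evaluation.

```python
from typing import Callable, Dict, List


def normalize(fact: str) -> str:
    """Lowercase, trim, drop a trailing period, and collapse whitespace."""
    return " ".join(fact.lower().strip().rstrip(".").split())


def exact_match(fact: str, pool: List[str]) -> bool:
    """Stand-in for the LLM judgment: present only on normalized exact match."""
    return normalize(fact) in {normalize(f) for f in pool}


def vid_fact_score(
    reference_facts: List[str],
    predicted_facts: List[str],
    is_present: Callable[[str, List[str]], bool] = exact_match,
) -> Dict[str, float]:
    # Recall: share of reference facts that appear in the prediction.
    covered = sum(is_present(f, predicted_facts) for f in reference_facts)
    recall = covered / len(reference_facts) if reference_facts else 0.0
    # Precision: share of predicted facts that appear in the reference.
    supported = sum(is_present(f, reference_facts) for f in predicted_facts)
    precision = supported / len(predicted_facts) if predicted_facts else 0.0
    # F1: harmonic mean of precision and recall.
    total = precision + recall
    f1 = 2 * precision * recall / total if total > 0 else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


reference = ["A man is running.", "A woman is running."]
predicted = ["A man is running.", "A dog is barking."]
result = vid_fact_score(reference, predicted)
print(result)
```

Passing the presence check in as a callable keeps the arithmetic separate from the judgment, so the exact-match stand-in can be swapped for a model-based judge without touching the metric itself.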

Results

In a comparison with the current state-of-the-art model (Video-ChatGPT), Pegasus-1 exhibits a significant 61% relative improvement on the MSR-VTT dataset and a 47% improvement on the Video Descriptions dataset, as measured by the QEFVC Quality Score. Against the ASR+LLM model cohort (including models such as Whisper+ChatGPT and Vendor A), the performance gap widens, with Pegasus-1 outperforming by 79% on MSR-VTT and 188% on the Video Descriptions dataset.


Evaluating on our newly proposed VidFactScore-F1 metric, Pegasus-1 shows a 20% absolute increase on the MSR-VTT Dataset and a 14% increase on the Video Descriptions dataset compared to Video-ChatGPT. When benchmarked against the ASR+LLM model cohort, the gains are 25% on the MSR-VTT Dataset and 33% on the Video Descriptions dataset. These findings consistently suggest that VidFactScore aligns well and correlates strongly with evaluations based on the QEFVC framework.
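Because the QEFVC results are quoted as relative improvements and the VidFactScore-F1 results as absolute increases, it may help to see the two calculations side by side. The baseline and model scores below are made-up numbers chosen only to illustrate the arithmetic; they are not the scores behind the reported results.

```python
def relative_improvement(baseline: float, new: float) -> float:
    """Gain expressed as a fraction of the baseline score."""
    return (new - baseline) / baseline


def absolute_improvement(baseline: float, new: float) -> float:
    """Plain difference between the two scores."""
    return new - baseline


# Hypothetical quality scores: a relative gain is measured against the baseline.
rel = relative_improvement(baseline=2.0, new=3.22)
print(f"relative improvement: {rel:.0%}")

# Hypothetical F1 scores: an absolute gain is the difference in F1 itself.
abs_gain = absolute_improvement(baseline=0.25, new=0.45)
print(f"absolute improvement: {abs_gain * 100:.0f} percentage points")
```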

An intriguing observation was made regarding videos predominantly reliant on speech, such as standup routines or lectures. Our model outperforms the ASR+LLM models even in these scenarios. Contrary to initial perceptions that ASR might suffice for such videos, our findings suggest otherwise. We postulate that even minimal visual cues (for instance, "a man performing standup comedy" or “a reaction video”) can enrich speech data, yielding more precise and encompassing summaries. Such outcomes underscore the notion that video comprehension surpasses mere speech understanding. It is clear that incorporating both visual and speech modalities is necessary to achieve a comprehensive understanding. See the “Reaction Video” in the “In-the-wild Examples” section below.

In-the-wild examples

These examples were randomly selected from diverse domains to illustrate the capabilities of Pegasus-1 compared to existing approaches.

Note that the generated output may contain:

- Hallucinations (creating a coherent story even though it is not factually depicted in the video)

- Irrelevant answers due to not being able to comprehend the prompt or question

- Biases

We welcome any feedback and will do our best to address it in the near future.

Generation Examples

Generated Title

The Revolution Unveiled: A Journey through Ideology, Excitement, and Innovation

Generated Topic

Action

Generated Hashtags

#information-purification-convictives, #garden-of-pure-ideology, #underground-tunnel, #guns, #live-event, #energy-and-excitement, #woman-in-white-attire, #animated-character, #martial-arts-skills, #dangerous-hallway, #armed-men, #Apple-Computer, #Macintosh, #revolutionary-potential

Generated Summary

The video takes us into the underground world, where a group of individuals move through dark tunnels, armed with guns, as they celebrate the first anniversary of the Information Purification Convictives. They have created a pure ideology, something unprecedented in history. The energy and excitement of a live event come alive as we see people engaged in various activities. A woman dressed in white leads the way, followed by others who join in, their enthusiasm palpable. A captivating display on a large screen captures the attention of the crowd, adding to the overall excitement. Amidst this chaos, we witness a skilled martial artist, the same woman in white, navigating a dangerous hallway while being pursued by armed men, showcasing her incredible combat skills. The scene is dramatically lit, creating a sense of tension and anticipation. The video concludes with the introduction of Apple's groundbreaking product, the Macintosh, promising a future that breaks away from the conformity of 1984. It is a mesmerizing journey into the underground revolution, a mix of ideology, thrilling action, and a glimpse of the future.

ChatGPT + Whisper (OpenAI): In this video, there is a celebration taking place for the first anniversary of the Information Purification Projects. The speaker talks about creating a garden of pure ideology, where workers are protected from conflicting rules. They mention that their unity is a stronger weapon than any fleet or army on Earth. The speaker declares that they are one people with one purpose and that their enemies will talk themselves to death as they bury them in their own confusion. The video ends with an announcement that on January 24th, Apple Computer will be introducing Macintosh, and it will be a revolutionary departure from the status quo of 1984. Overall, the video seems to highlight the power and unity of this organization or project, as well as a teaser for an upcoming product unveiling by Apple

Generated Chapters

Creating a Garden of Pure Ideology 00:00:00 - 00:00:15

The video begins with a group of individuals moving through an underground tunnel, armed with guns, as they celebrate the first anniversary of the Information Purification Convictives. They discuss the creation of a garden of pure ideology.

Energy and Excitement of a Live Event 00:00:15 - 00:00:30

The video captures the energy and excitement of a live event, with people engaged in various activities. A woman in white attire leads the way, followed by others who join in. Captivating images displayed on a large screen add to the overall excitement and entertainment.

Display of Incredible Combat Skills 00:00:30 - 00:00:45

A woman in white attire navigates a dangerous hallway while being pursued by armed men. She showcases her incredible martial arts skills in a dramatically lit area, captivating the viewer with her agility and combat techniques.

Introduction of Apple's Macintosh 00:00:45 - 00:01:00

The video concludes with the introduction of Apple's groundbreaking product, the Macintosh. This new technology promises a future that breaks away from the conformity of 1984, offering viewers a glimpse of the possibilities that lie ahead.

Generated Highlights

00:00:00 - 00:00:15

The video showcases a group of individuals moving through an underground tunnel, some of them armed with guns.

00:00:15 - 00:00:30

The video captures the energy and excitement of a live event, with people engaged in various activities.

00:00:30 - 00:00:45

The video showcases a woman in white attire who walks through a dangerous hallway while being pursued by armed men.

Generating Answers based on Custom Prompts

Prompt: What kind of ad is this?

Output: This ad is a promotional advertisement for Apple's Macintosh computer, highlighting its revolutionary potential in technology.

Prompt: Describe the main character in the video

Output: The main character in the video is a woman dressed in white attire. She is seen running through an underground tunnel and engaging in martial arts combat. She is also shown using a gun to fight against individuals wearing black armor.

Generated Title

Chaos in the Kitchen: A Journey of Restaurant Management and Food Review

Generated Topic

Restaurant Management

Generated Summary

In this video, we see a chaotic and hectic restaurant kitchen environment. The man in black attire watches various videos and reacts to the content, which includes interviews with staff members discussing their experiences in restaurant management. There are discussions about menu creativity, the importance of proper management, and the challenges faced in the kitchen. The video captures the frustrations of the staff, communication breakdowns, and the need for improvement in food quality. Despite the chaos, there are moments of success and teamwork. The video also highlights the presence of influential food bloggers and the impact of their reviews. Overall, the video showcases the challenges and triumphs of running a restaurant, with a mix of humor, frustration, and determination.

ChatGPT + Whisper (OpenAI): The video focuses on a restaurant called Down City in the center of town. Abby and her best friend Rico bought the restaurant but faced numerous challenges, including a lack of experience in managing a restaurant and a poorly designed menu. Gordon Ramsay visits the restaurant and expresses his disappointment with the food and the unsanitary state of the kitchen. He confronts Abby about her lack of cooking experience and her resistance to change. Ramsay decides to help them turn things around by implementing a new menu and providing guidance in the kitchen. The restaurant undergoes a transformation, leading to improved food quality and a positive response from a food blogger. However, despite the improvements, the restaurant eventually closes due to negative Yelp reviews and discontent from regular customers.

Generated Chapters

Introduction and Challenges in Restaurant Management 00:00:00 - 00:05:30

The video begins with a man reacting to a video featuring Gordon Ramsay and discussing its relevance for restaurant management. Various individuals discuss their experiences in owning and managing restaurants. The challenges and frustrations of running a restaurant are highlighted, including menu creativity and the importance of proper management.

Culinary Disasters and Communication Breakdowns 00:05:30 - 00:11:30

The video showcases chaotic and hectic scenes in a restaurant kitchen. The man in black attire reacts to various food-related videos and discusses the chaos in the kitchen. The importance of effective communication, consistency, and food quality are emphasized. Moments of frustration, humor, and determination are captured.

Facing Criticism and Seeking Improvement 00:11:30 - 00:15:30

The video focuses on the challenges faced by the restaurant staff and their interactions with influential food bloggers. The impact of online reviews and the need for improvement in food quality are discussed. The importance of embracing feedback, teamwork, and effective management is emphasized.

Redemption and Success in the Kitchen 00:15:30 - 00:21:30

The video shows the kitchen staff working together to overcome the chaos and improve the quality of their food. The man in black attire reacts to the success of the restaurant and the positive reviews. The importance of organization, communication, and teamwork in the kitchen is highlighted.

Conclusion and Reflection 00:21:30 - 00:24:05

The video concludes with the man reflecting on the events of the video and discussing the challenges faced by food reviewers. The importance of accurate follower counts and the impact of online reviews on restaurants are highlighted.

Generated Highlights

00:18:00 - 00:18:15

The chef struggles with a communication breakdown in the kitchen.

00:22:00 - 00:22:15

The kitchen regains control with Abby focused on the task at hand.

00:22:30 - 00:22:45

The kitchen experiences smooth sailing with Jimmy in control.

Generating Answers based on Custom Prompts

Prompt: What kind of clothing is the video reviewer wearing?

Output: The video reviewer is wearing a black t-shirt.

Prompt: Who is the main chef? (Rejection scenario)

Output: Based on the video information, it is not mentioned who the main chef is.

Comparison against existing models

Ours (Pegasus-1):

In this captivating video, we are taken on a virtual journey to the enchanting city of Paris. Through various websites and search terms, we are introduced to a plethora of study abroad opportunities available in this cosmopolitan hub. The video emphasizes the appeal of Paris for English speakers and expats, showcasing tailored information and search results that cater to their needs. As we delve deeper, we witness a person searching for job opportunities in Paris using Google, stumbling upon a treasure trove of relevant listings. The video concludes with a comprehensive guide on exploring opportunities in Paris, giving valuable tips on finding solutions and writing advertisements. This video truly captures the essence of the captivating city of Paris and the endless possibilities it holds for those seeking new horizons.

Video-ChatGPT:

The video shows a Google Chrome browser with a search bar and a search button. The search button is labeled "I'm Feeling Lucky" and is located below the search bar. The video also shows a search result page with a list of search results.

OpenAI ChatGPT + Whisper:

In the video, someone named Silva is receiving a phone call. They answer the call by saying "Allo?" which is a common way of answering the phone in some French-speaking countries. Based on this short transcription, it appears that the video might involve a conversation or interaction involving Silva. However, without further information, it is difficult to determine the exact context or purpose of the video.

Ours (Pegasus-1):

In this animated video, a man hilariously shares his experiences working at a Subway sandwich shop and recounts humorous stories about customers. He mentions that about 80% of the customers were fine, but there were some who would annoy him with their requests, like asking for a meatball on flatbread or rushing through their order. The man also talks about the challenges of providing specific ingredients and receiving accurate information from customers. He shares some tricks he used while working at Subway, like recommending provolone cheese because it's easy to handle with gloves on. The video also includes funny moments, such as the man waiting for the receipt to print after a credit card transaction and customers expecting the manager to give them free stuff. The man admits to not strictly following the Subway formula and sometimes adding extra ingredients to customers' orders. He mentions incidents like customers not wanting to pay for their sandwiches and customers stealing from the store. Overall, the video portrays humorous encounters at a fast-food restaurant and highlights the importance of treating fast food workers with respect.

Video-ChatGPT:

The video shows a man in a white shirt and a black hat holding a sign with a picture of a sandwich on it. He is talking to the camera and pointing at the sign. The video also shows a man in a white shirt and a black hat holding a sign with a picture of a sandwich on it. He is talking to the camera and pointing at the sign.

OpenAI ChatGPT + Whisper:

In this video, the narrator discusses their experiences working at Subway and shares some humorous and annoying encounters with customers. They talk about how some customers would ask for specific sandwich toppings but forget to specify crucial details, like the type of bread or cheese. The narrator also reveals some tricks they would use, such as recommending certain cheese types based on ease of handling. They also mention how the slow receipt printer caused awkward moments with customers. The narrator shares a story about pretending to be the manager when customers demanded to speak to one. They also talk about being more lenient with customers, occasionally disregarding Subway's rules, and giving some examples like adding extra toppings or charging less for certain items. The narrator then shares a couple of interesting customer encounters, including a customer who didn't want to pay for his sandwiches and a customer who kept asking for onions "on the side" without clarifying what that meant. They also mention a customer who tipped them generously and another who placed a large order without prior notice. The narrator jokes about a customer who requested extra lettuce to the point where the sandwich couldn't be closed. They also discuss some instances of theft by customers, including someone filling a water cup with soda and another person hiding stolen chips. The video wraps up with the narrator emphasizing the importance of treating fast food workers with respect and acknowledging their humanity

Ours (Pegasus-1):

In this video, we see a woman who is ready for a change in her appearance. She starts off by cutting her hair, revealing the shortest style she's ever had. The woman explains that after having the same look for a long time, she felt the need to reinvent herself. She then visits her friend James Mijoo, a talented colorist in Los Angeles, who turns her vision into reality. With her new haircut, the woman feels inspired to try bolder looks and shares her everyday makeup routine. She starts by prepping and conditioning her lips with a lip cushion. She has been experimenting with luminizing primers to give her skin a radiant glow, applying a dime-sized amount on her face. As she applies makeup, she spot treats with concealer and blends custom colors using a cushion sponge. Embracing her natural skin texture, she mattifies certain areas to create a flawless look. The woman then focuses on shaping and coloring her eyebrows, using microfluff and brunette products. She demonstrates blending custom colors and applying concealer to achieve a polished result. Moving on to eye makeup, she uses lip cloud and faded clementine shades as cream shadow on her lids, creating a base color and applying a deep brown eyeliner to create a soft shadow. She mentions using matte peach called Sunset instead of shimmers for a different effect. As she continues to apply makeup, she talks about using eyebrow pencils for grooming and shaping, as well as using a brush and mascara for her eyelashes. She highlights her cheeks and applies blush for a natural finish, mentioning the benefits of Heavenly from Divine Skies as a lip gloss. She concludes her everyday makeup look, emphasizing that it is minimal and easy to achieve. The woman expresses her excitement for trying bolder looks with her new haircut and invites viewers to follow her on Instagram. Throughout the video, there are advertisements for various makeup products and stickers she uses to enhance her look. 
The video closes with a positive message of reinventing one's look through hair, makeup, and confidence for a new decade. Overall, it's an inspiring and informative video that encourages viewers to embrace change and try new things in their own beauty routines.

Video-ChatGPT:

The video shows a woman applying makeup to her eyes, lips, and cheeks. She is wearing a white shirt and a black jacket. The video is set to a song, and the woman is seen smiling at the camera.

OpenAI ChatGPT + Whisper:

In this video, the person starts by talking about their new haircut, which is the shortest they've ever had. They mention going to their friend James, who is a talented colorist, and how they trusted him with their new look. They then transition into showing their everyday makeup routine. They start by prepping their lips with a lip cushion and then move on to using a luminizing primer to give their skin a glow. Since they don't use foundation, they spot treat with concealer and blend it out with a cushion sponge. They talk about embracing their natural skin texture and then move on to their eyebrows. They use a brow product to shape and fill in their brows, emphasizing the multi-use of products. They then use a lip product as a cream eyeshadow and layer a deep brown lip crayon as a base for their eyeliner. They mention using different eyeliners for each eye shape and finish off with adding a pop of color to their lower lash line. They use a versatile blush-highlighter product on their cheeks, eyes, forehead, and chin. They finish the look with a lip gloss that helps smooth out fine lines. They use a highlighter on their nose and apply mascara. They show the final look and express their excitement to try bolder looks with their new haircut. They mention an Instagram account that provides cute stickers and encourage viewers to check it out.

Overall, the video is about the person's new haircut and their everyday minimal makeup routine. They show the step-by-step process and provide tips along the way. They also mention future content and promote an Instagram account for cute stickers.

Ours (Pegasus-1):

Once the couple arrives at the campsite, they carefully choose the perfect spot in the picturesque autumn forest to set up their tent. They make sure to avoid uneven ground, roots, rocks, and hilly sites. After unpacking their tent bag, which includes the tent body, tent fly, poles, pegs, ground sheet, and guy lines, they begin setting up. They lay the ground sheet over the selected location, roll out the tent, and align the door in the desired position. With the tent poles assembled, they insert one end into the grommet on the corner of the tent body and secure them using clips. They raise the tent body by attaching the poles on opposite sides to the grommets. The process is made easier with two people. Once the tent is up, they secure it to the ground using nylon webbing straps and pegs, ensuring the floor is stretched tight. They then attach the tent fly, which will keep them dry in case of rain, and ensure it aligns with the entrance of the tent. With everything set up, they move their bedding and gear into the tent. Throughout the process, they maintain a smile on their faces, indicating their excitement for their camping trip. When it's time to leave, they take everything apart in reverse order, packing their gear back into the tent bag. Before departing, they do a final walk around the campsite, picking up any garbage and leaving the site in good condition for future visitors. The video ends with a message from the Government of Canada, encouraging viewers to join the Camping or Tent Setup program of Parks Canada and explore the great outdoors with their family.

Video-ChatGPT:

The video shows a group of people sitting around a fire in a forest. They are cooking food and drinking water. One person is seen putting a tarp on the ground. The video ends with the group sitting around the fire.

OpenAI ChatGPT + Whisper:

The video provides instructions on setting up a tent at a campsite. It advises viewers to choose a flat area without rocks or roots, away from the fire pit, and discusses the necessary equipment like poles and pegs. The video demonstrates the process of assembling the tent, attaching the tent fly, and securing the tent to the ground using pegs and nylon straps. It also emphasizes the importance of leaving the campsite clean and picking up any garbage before leaving. The video concludes with a message from the Government of Canada encouraging responsible camping and leaving no trace.

Ours (Pegasus-1):

In this video, we witness the intense competition of the Olympic Men's 100m Final. A group of male athletes from various countries, including Jamaica, the United States, and the Netherlands, line up at the starting point on the track. The athletes showcase their remarkable speed and athleticism as they sprint towards the finish line. We see the dominant performance of Usain Bolt, who breaks the world record and wins the race with a time of 9.63 seconds. The video captures Bolt's incredible running technique and his ability to pull away from the other competitors. The crowd cheers and celebrates as Bolt becomes the fastest man on the planet once again. Overall, the focus of the video is on the impressive running skills and performance of these exceptional athletes in the Men's 100m Final.

Video-ChatGPT:

The video shows a man running on a track and jumping over a hurdle. He is wearing a red shirt and is surrounded by a crowd of people. The video also shows a man in a red shirt running on a track and jumping over a hurdle.

OpenAI ChatGPT + Whisper:

The video is a recording of the men's 100 meters final at the Olympic Games. The commentator gives a description of the race and provides information on each athlete in the lineup. Usain Bolt, the defending champion and world record holder, is featured prominently. The race begins and Bolt starts slightly slower than Justin Gatlin, but quickly catches up and sprints ahead of the competition. He crosses the finish line in first place, setting a new Olympic record of 9.63 seconds. Johan Blake finishes in second place, and Justin Gatlin takes the bronze medal. The commentator praises Bolt's performance and highlights his overwhelming dominance in the race. Bolt's victory marks his second consecutive Olympic gold medal in the 100 meters, solidifying his status as one of the greatest sprinters of all time. The video concludes with celebrations and interviews with the athletes. Additionally, the commentator briefly mentions another event, the men's hammer throw.

Ours (Pegasus-1):

In this Thanksgiving cooking video, we are introduced to two women who are excitedly preparing a delicious feast. They start by heading over to the kitchen and getting ready for the cooking madness. The first dish they make involves cutting bananas in half and placing them on baking sheets before roasting them in the oven. Then, they move on to chopping onions and cooking them in the oven. The aroma of the onions fills the air as they expertly handle the ingredients. Next, they cook mushrooms in a pot, commenting on how they resemble eggplants. As the cooking progresses, they mash up the roasted bananas and mix them with cranberries for a banana bread batter. They then demonstrate how to mix apples and eggs, creating a creamy and flavorful mixture. Throughout the video, they add ingredients like soy sauce and Better Than Bouillon to enhance the flavors of various dishes. The process of folding in green beans and mixing them with onions and garlic is shown in detail. Additionally, they prepare turkey meat by seasoning it with spices like rosemary, thyme, and lemon. The turkey is cooked to perfection with massage butter applied under the skin. The duo also cooks chicken wings with mashed potatoes, adding ginger and preparing a sauce for sweet potatoes. They use their culinary skills to create savory gravies by utilizing the drippings from the cooked meats. The final result of their hard work is a mouthwatering Thanksgiving spread, complete with chicken and turkey dishes, yams, cornbread with cranberry sauce, and a variety of other tasty meals. As they enjoy the feast with their loved ones, the atmosphere is filled with joy and gratitude. The video showcases not only the process of cooking but also the celebration of food and the pleasures of gathering around the table to share a delicious meal with friends and family.

Video-ChatGPT:

The video shows a woman cooking a dish in a kitchen. She is seen cutting up vegetables and cooking them in a pan. The video also shows her stirring the dish and adding ingredients to it. The dish appears to be a soup, and the woman is seen stirring it with a spoon.

OpenAI ChatGPT + Whisper:

In this video, the narrator begins by introducing that it is Thanksgiving morning and they will be cooking with someone named Christine. They start by making banana bread with overripe bananas that have been roasted. They then chop onions and mushrooms for a dish, and mention that the mushrooms look like eggplants. The roasted bananas are mashed and added to the banana bread batter. Next, they add cranberries to the mixture. The video then shows them adding soy sauce to a green bean casserole for added flavor. The narrator also shows a turkey, seasons it with rosemary, thyme, and lemon, and massages butter under the skin for a juicy turkey. The turkey is then placed in the oven. Moving on, they prepare a mushroom gravy for the green bean casserole and add milk and flour to thicken it. They demonstrate using an Instant Pot to cook gizzards quickly. The video continues to showcase the preparation of various dishes, such as sweet potato sauce, chicken gravy, turkey gravy, and beef gravy. Velveeta cheese is added to one of the dishes for extra flavor. The narrator's mother helps with some of the preparation. The video ends with a shot of the finished Thanksgiving spread, including turkey, green bean casserole, mac and cheese, stuffing, cornbread, and cranberry sauce.

Limitations and Best Usage Guidelines

Video Duration: Our API supports videos from 15 seconds to 1 hour in length (30 minutes for the Preview release), and we plan to widen this range to cover 4 seconds to 2 hours. For now, we recommend videos in the 5 to 15-minute range.
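The duration limits above can be checked on the client side before a video is submitted. The following is a minimal sketch, not part of the Twelve Labs API: the names `check_duration` and `DurationCheck` are hypothetical, and the only values taken from this post are the 15-second minimum, the 1-hour maximum (30 minutes for the Preview release), and the recommended 5 to 15-minute range.

```python
from dataclasses import dataclass

# Limits quoted in this post, expressed in seconds.
MIN_SECONDS = 15
MAX_SECONDS_GENERAL = 60 * 60          # 1 hour
MAX_SECONDS_PREVIEW = 30 * 60          # 30 minutes for the Preview release
RECOMMENDED_RANGE = (5 * 60, 15 * 60)  # 5 to 15 minutes


@dataclass
class DurationCheck:
    """Result of a client-side duration check (hypothetical helper type)."""
    supported: bool
    recommended: bool
    reason: str


def check_duration(seconds: float, preview: bool = True) -> DurationCheck:
    """Classify a video length against the supported and recommended ranges."""
    max_seconds = MAX_SECONDS_PREVIEW if preview else MAX_SECONDS_GENERAL
    if seconds < MIN_SECONDS:
        return DurationCheck(False, False, "shorter than 15 seconds")
    if seconds > max_seconds:
        return DurationCheck(False, False, f"longer than {max_seconds // 60} minutes")
    low, high = RECOMMENDED_RANGE
    if low <= seconds <= high:
        return DurationCheck(True, True, "within the recommended range")
    return DurationCheck(True, False, "supported, but outside the recommended 5 to 15 minutes")
```

For example, `check_duration(45 * 60, preview=True)` reports the video as unsupported, while the same length with `preview=False` is supported but not in the recommended range.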

Types of Video Content: While Pegasus-1 was trained on diverse videos, we recommend using it on videos with a consistent theme or topic. For example, a video compilation of unrelated segments might disrupt the model’s ability to generate a consistent summary.

Hallucinations: Pegasus-1 shows promising initial results and offers a new paradigm of tackling video understanding tasks. However, it can occasionally produce inaccurate outputs. Its reliability remains an area for improvement. Efforts are underway to address hallucinations and we expect significant progress in the near future.

Safety & Biases: Despite our diligent efforts in data selection and cleaning, the model may still produce NSFW content or reflect unintentional biases. Our understanding of ethical and safety measures for video foundation models is still developing. As we continue testing and gathering feedback, we will release a detailed evaluation and ethics report.

Chat Capability: Pegasus-1 is engineered mainly for extracting factual information from videos, not for general-purpose video chats. Our experimental Generate API accepts user prompts and offers limited chat functionality, though it isn't specifically tailored for this purpose.

Semantic Reliability: Pegasus-1 may generate substantially different outputs for prompts that are semantically similar. We acknowledge this issue and plan to mitigate it in future work.
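One rough way to observe this sensitivity is to send several paraphrases of the same prompt and compare the outputs. The sketch below is an illustration under stated assumptions, not an evaluation method used in this post: `generate` stands in for any function that maps a prompt to text (for example, a wrapper around a video-to-text call), and word-level Jaccard overlap is a deliberately crude similarity measure chosen only because it needs no extra libraries.

```python
from itertools import combinations
from typing import Callable, Iterable


def jaccard(a: str, b: str) -> float:
    """Word-level Jaccard overlap between two texts (1.0 means identical word sets)."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    if not words_a and not words_b:
        return 1.0
    return len(words_a & words_b) / len(words_a | words_b)


def prompt_consistency(generate: Callable[[str], str], prompts: Iterable[str]) -> float:
    """Average pairwise overlap of outputs for a set of paraphrased prompts.

    `generate` is a hypothetical callable supplied by the caller; it is not
    a real Twelve Labs function.
    """
    outputs = [generate(p) for p in prompts]
    pairs = list(combinations(outputs, 2))
    if not pairs:
        return 1.0  # zero or one output: nothing to disagree with
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)
```

A score near 1.0 suggests the paraphrases produced similar wording, and a low score flags prompts worth inspecting by hand. Because word overlap ignores meaning, a low score does not by itself show that the outputs are factually different.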

Closing Remarks

Twelve Labs is proud to introduce Pegasus-1. This reflects our commitment to advancing the field of multimodal video understanding. Our video-language foundation model offers a pioneering approach to generative tasks related to video content. It is a meaningful first step towards achieving our mission of making videos just as easy as text.

We are a group of friendly, curious, and passionate people from all walks of life with a vision of driving the technological singularity for the betterment of humanity.

More coming soon.

Twelve Labs Team

This is a joint team effort across multiple functional groups including product, engineering, science, and business development. (First-name alphabetical order)

Product & Design: Andrei Popescu, Anthony Giuliani, EK Yoon, James Le, Jenna Kang, Soyoung Lee, Sue Kim (intern), Sunny Nguyen, Travis Couture

Engineering: Abraham Jo, Ed Park, Esther Kim, Kevin Han, Noah Seo, Ryan Won, SJ Kim, Tony Moon

Model & Data: Aiden Lee, Daniel Kim, Jae Lee, Jay Suh, Jay Yi, Jeff Kim, Kael Lee (intern), Laine Kong (intern), Lucas Lee, Mars Ha, Minjoon Seo, Ray Jung, Shane Hong (intern), William Go

Operations & Support: Dave Chung, Hans Yoon, Jenny Ahn

Resources

- Link for a preview access request to Generate API

- Link for our evaluation codebase

- Link to the API documentation

If you'd like to cite this post in your work, please use the following BibTeX citation:
