This tutorial is co-authored with Daniel Gural, an ML evangelist at Voxel51.

Videos are a source of mountains of data, all packed into a small payload. Unlike any single modality, videos are full of image and audio data, and they also change over time. Data scientists have spent years studying how best to unpack all of this and understand exactly what is in our videos.

A new age brings new challenges. Many data scientists and machine learning engineers face terabytes of videos and are tasked with selecting only the ones critical to training their models. This once-daunting task is now made simple by the newest development in video understanding: video semantic search.

In this tutorial, you will learn how to build a FiftyOne plugin that harnesses the power of Twelve Labs Video Semantic Search, enabling you to create fast and repeatable search workflows. Whether you are a seasoned developer looking to leverage the Twelve Labs API or an enthusiast eager to explore the capabilities of the FiftyOne open-source tool, this tutorial will equip you with the knowledge and skills to seamlessly integrate Video Semantic Search into your projects.

You can find the finished result of the tutorial on the Semantic Video Search Plugin GitHub page. A video of this walkthrough is also available on the Twelve Labs YouTube channel!

Getting Started

Before we get started, let's go over what you will need to build the plugin. You will need:

- A Twelve Labs Account: Twelve Labs builds powerful foundation models for video understanding that enable downstream tasks such as semantic search using natural language queries, zero-shot classification, and text generation from video content. Join for free if you haven't already!

- A clone of the FiftyOne GitHub repository: FiftyOne is an open-source tool for building high-quality datasets and computer vision models, maintained by Voxel51.

- A clone of the FiftyOne Plugins repository: FiftyOne provides a powerful plugin framework for extending and customizing the functionality of the tool. You will want to install the FiftyOne Plugins Management and Development plugin, which contains utilities for managing your FiftyOne plugins and building new ones.

- A video dataset.

Once you have the required materials, we can dive in! First, let's load our data into FiftyOne and test that our Twelve Labs API access is configured correctly.

I will be using FiftyOne's quickstart-video dataset. It is quick and easy to spin up:

Next, make sure you have defined the Twelve Labs API key and API URL you are going to use. We can do a simple test to check that they are configured correctly by retrieving any existing indexes. First, let's set our key and URL as environment variables:
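A minimal sketch for a Jupyter notebook. The variable names `TWELVE_LABS_API_KEY` and `TWELVE_LABS_API_URL` are our own hypothetical choices, and the base URL (including its version segment) is an assumption, so confirm it against the Twelve Labs API reference:

```python
import os

# Hypothetical variable names; later steps only need to read the same names back
os.environ["TWELVE_LABS_API_KEY"] = "<your-api-key>"  # copy from your Twelve Labs dashboard
# Assumed base URL and version; check the Twelve Labs API reference for the current one
os.environ["TWELVE_LABS_API_URL"] = "https://api.twelvelabs.io/v1.2"
```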

Or
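Export them from your shell before launching Jupyter, using the hypothetical names `TWELVE_LABS_API_KEY` and `TWELVE_LABS_API_URL`. Either way, later steps only need to read them back. Here is a minimal sketch of a hypothetical helper, `get_twelve_labs_config`, that does this and fails early if either variable is missing:

```python
import os


def get_twelve_labs_config(env=None):
    """Return (api_key, api_url) from the hypothetically named environment variables."""
    env = os.environ if env is None else env
    api_key = env.get("TWELVE_LABS_API_KEY")
    api_url = env.get("TWELVE_LABS_API_URL")
    missing = [
        name
        for name, value in (("TWELVE_LABS_API_KEY", api_key), ("TWELVE_LABS_API_URL", api_url))
        if not value
    ]
    if missing:
        raise RuntimeError(f"Missing environment variable(s): {', '.join(missing)}")
    # Drop any trailing slash so endpoint paths can be appended as f"{api_url}/indexes"
    return api_key, api_url.rstrip("/")
```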

Next, we will use the Twelve Labs API to list any existing indexes, which also confirms that our variables are configured correctly.
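A minimal sketch using the `requests` library. It assumes the REST endpoint `GET {API_URL}/indexes`, authentication via the `x-api-key` header, and a response whose `data` list holds index objects with `_id` and `index_name` keys. `list_indexes` and `index_summaries` are hypothetical helper names:

```python
import requests


def list_indexes(api_url: str, api_key: str) -> dict:
    """GET the existing indexes; raises requests.HTTPError if the request fails."""
    response = requests.get(
        f"{api_url.rstrip('/')}/indexes",
        headers={"x-api-key": api_key},  # Twelve Labs API key header
        timeout=30,
    )
    response.raise_for_status()  # surface 401/403/404 instead of silently continuing
    return response.json()


def index_summaries(payload: dict) -> list:
    """Extract (id, name) pairs, assuming {"data": [{"_id": ..., "index_name": ...}, ...]}."""
    return [(item.get("_id"), item.get("index_name")) for item in payload.get("data", [])]


# Usage (needs a valid key and network access):
# print(index_summaries(list_indexes(api_url, api_key)))
```

An empty list is still a success: it just means the account has no indexes yet.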

Make sure the response reports success (an HTTP 200 status) rather than an error!

Performing Video Semantic Search

After a successful connection, we can break down the steps we want our plugin to perform. There are three main goals:

- Create an index

- Index our dataset

- Search our dataset

We will combine goals one and two into a single operator and create a second operator for search. Let's first try the whole flow, start to finish, in a Jupyter notebook. You can find more information in the FiftyOne and Twelve Labs docs.

1 - Create an index called “VideoSearch”

Our index will support searching by visual content, text in video (text_in_video), and logos. Twelve Labs also offers conversation search if your videos have audio.
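Here is a sketch of creating the index over REST. The request body (`index_name`, `engine_id`, `index_options`) follows an older, v1.1-style schema, and the `marengo2.5` engine name is a placeholder. Both are assumptions, since newer API versions describe engines differently. `build_index_payload` and `create_index` are hypothetical helpers:

```python
import requests


def build_index_payload(index_name="VideoSearch",
                        engine_id="marengo2.5",  # placeholder engine name (assumption)
                        options=("visual", "text_in_video", "logo")):
    """Assumed v1.1-style body; add "conversation" to options if your videos have audio."""
    return {"index_name": index_name, "engine_id": engine_id, "index_options": list(options)}


def create_index(api_url: str, api_key: str, payload: dict) -> str:
    """POST the payload to the (assumed) /indexes endpoint and return the new index ID."""
    response = requests.post(
        f"{api_url.rstrip('/')}/indexes",
        headers={"x-api-key": api_key},
        json=payload,
        timeout=30,
    )
    response.raise_for_status()
    return response.json()["_id"]  # assumed: the new index ID is returned as "_id"


# Usage (needs a valid key and network access):
# index_id = create_index(api_url, api_key, build_index_payload())
```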

2 - Index our dataset

We need to check that each sample is longer than 4 seconds to meet Twelve Labs' minimum duration requirement. For each video, we upload it to Twelve Labs and wait for confirmation that indexing has finished before moving on to the next one.
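Here is a sketch of the indexing loop. It assumes three things about the API:

- a `POST {API_URL}/tasks` upload with `index_id`, `language`, and `video_file` form fields,
- a `GET {API_URL}/tasks/{task_id}` endpoint whose `status` eventually becomes `ready` (or `failed`),
- a `video_id` on the finished task.

The helper names and the `tl_video_id` sample field are hypothetical. The polling helper takes a status callback, so you can try it without network access:

```python
import time

import requests

MIN_DURATION_S = 4.0  # Twelve Labs' minimum video length, per the requirement above


def long_enough(duration, min_seconds=MIN_DURATION_S):
    """True if a duration in seconds exceeds the minimum; unknown durations are skipped."""
    return duration is not None and duration > min_seconds


def wait_for_task(get_status, poll_seconds=10.0, timeout_seconds=1800.0, sleep=time.sleep):
    """Poll get_status() until it returns "ready"; raise on "failed" or on timeout."""
    waited = 0.0
    while True:
        status = get_status()
        if status == "ready":
            return status
        if status == "failed":
            raise RuntimeError("Twelve Labs indexing task failed")
        if waited >= timeout_seconds:
            raise TimeoutError(f"Not ready after {timeout_seconds} s (last status: {status})")
        sleep(poll_seconds)
        waited += poll_seconds


def index_video(api_url, api_key, index_id, filepath, language="en"):
    """Upload one video as an indexing task, block until ready, return the task JSON."""
    base, headers = api_url.rstrip("/"), {"x-api-key": api_key}
    with open(filepath, "rb") as f:
        response = requests.post(
            f"{base}/tasks",
            headers=headers,
            data={"index_id": index_id, "language": language},  # assumed form fields
            files={"video_file": f},
            timeout=600,
        )
    response.raise_for_status()
    task_id = response.json()["_id"]

    def get_task():
        r = requests.get(f"{base}/tasks/{task_id}", headers=headers, timeout=30)
        r.raise_for_status()
        return r.json()

    wait_for_task(lambda: get_task()["status"])
    return get_task()  # assumed to include the "video_id" assigned by Twelve Labs


# Usage with the FiftyOne dataset (hypothetical "tl_video_id" field):
# dataset.compute_metadata()  # fills sample.metadata.duration
# for sample in dataset:
#     if long_enough(sample.metadata.duration):
#         task = index_video(api_url, api_key, index_id, sample.filepath)
#         sample["tl_video_id"] = task["video_id"]
#         sample.save()
```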

3 - Perform search queries on your dataset

For example, I searched for “sunny day” and got back the results that match this query.

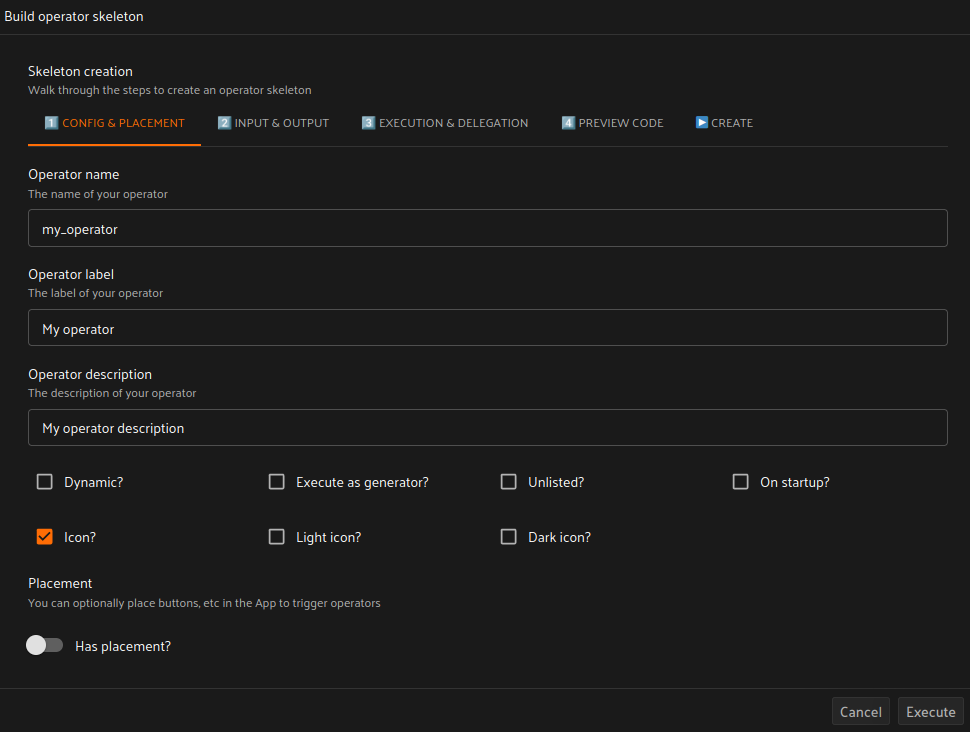
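Here is a sketch of the search step. It assumes a `POST {API_URL}/search` endpoint that takes `index_id`, `query`, and `search_options`, and that returns matching clips in a `data` list with `video_id` keys. The matched IDs are then mapped back to FiftyOne samples through the hypothetical `tl_video_id` field stored while indexing:

```python
import requests


def search(api_url, api_key, index_id, query, options=("visual",)):
    """POST a natural-language query to the index and return the parsed JSON response."""
    response = requests.post(
        f"{api_url.rstrip('/')}/search",
        headers={"x-api-key": api_key},
        # Assumed request fields; newer API versions may rename some of them
        json={"index_id": index_id, "query": query, "search_options": list(options)},
        timeout=60,
    )
    response.raise_for_status()
    return response.json()


def matched_video_ids(results):
    """Unique video IDs in ranked order, assuming {"data": [{"video_id": ...}, ...]}."""
    ids = []
    for clip in results.get("data", []):
        video_id = clip.get("video_id")
        if video_id is not None and video_id not in ids:
            ids.append(video_id)
    return ids


# Usage in the notebook (hypothetical "tl_video_id" field):
# from fiftyone import ViewField as F
# ids = matched_video_ids(search(api_url, api_key, index_id, "sunny day"))
# session.view = dataset.match(F("tl_video_id").is_in(ids))
```

Several clips can come from the same video, so the IDs are de-duplicated before filtering the dataset.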

Once our workflow has been tested and verified, it's time to put it into a FiftyOne plugin! We will start from a FiftyOne operator skeleton to flesh out our starting code. Luckily, the FiftyOne Plugins Management & Development plugin can help: its `build operator skeleton` operator generates all of the boilerplate code blocks for us!

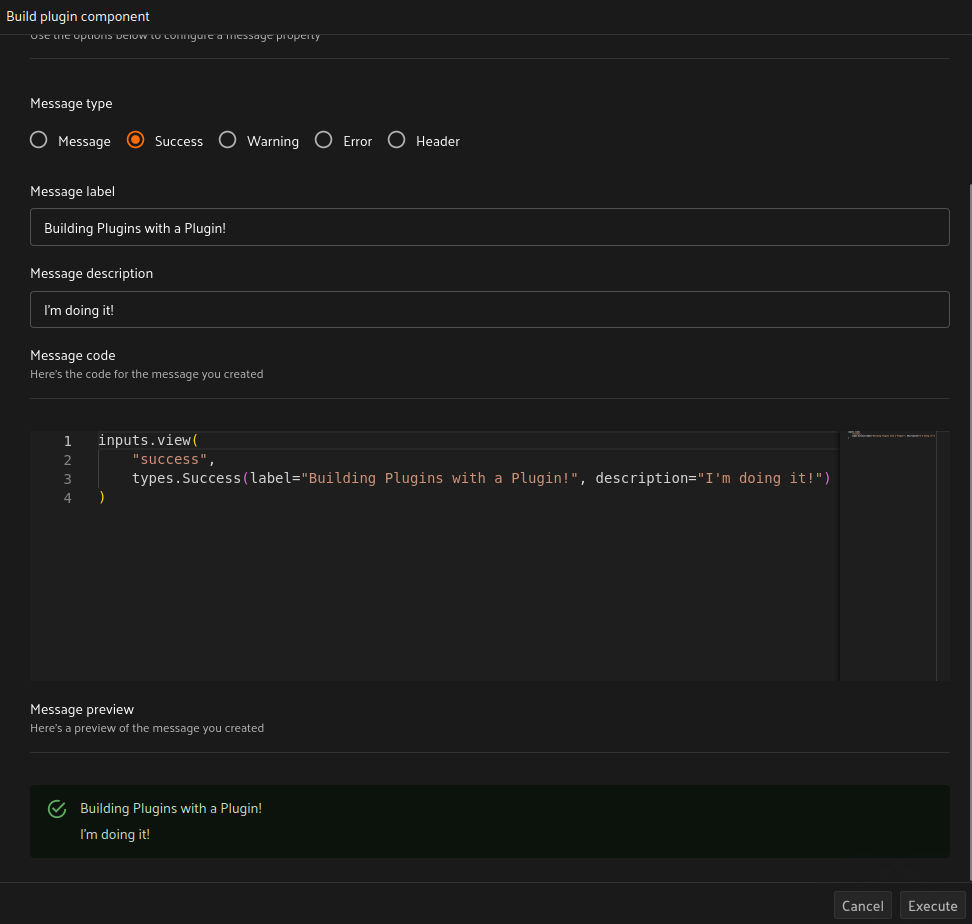

Once we have a starting point for our plugin, we also use the `build plugin component` operator to build in the rest of our input fields and messages. This is an easy way to explore which UI components you want to add to the plugin. All of the generated code is Python, so it is easy to tweak until it includes exactly what you want.

With our skeleton code complete and our Twelve Labs workflow defined, the last step is to put it all together! We drop the appropriate code into our two operators, save, refresh the App, and test our plugin!

Conclusion

By leveraging Twelve Labs' Video Semantic Search, developers can unlock a wide range of applications across various industries, including social media, brand insights, podcasts, and user-generated content. This powerful tool enables efficient video analysis and the retrieval of video content in context, making it invaluable for educational content search, enterprise knowledge management, and video editing suite platforms.

Twelve Labs is a pioneering startup that specializes in building foundation models for video understanding. Our latest video-language foundation model, Pegasus-1, and the suite of video-to-text APIs offer cutting-edge solutions for holistically understanding video data. Voxel51, meanwhile, is the company behind FiftyOne, an open-source tool that provides a powerful platform for data exploration and visualization. That makes it an ideal companion for developers seeking to integrate Twelve Labs' Video Semantic Search into their projects.

What's Next?

- Check out the Quickstart tutorial, and begin building amazing apps with Twelve Labs.

- Try out our Playground. Default video credits are 10 hours.

- Follow us on X (Twitter) and LinkedIn.

- Join our Discord community to connect with fellow users and developers.
